Interpreting Coefficients of Logistic Regressions

01 Aug 2016

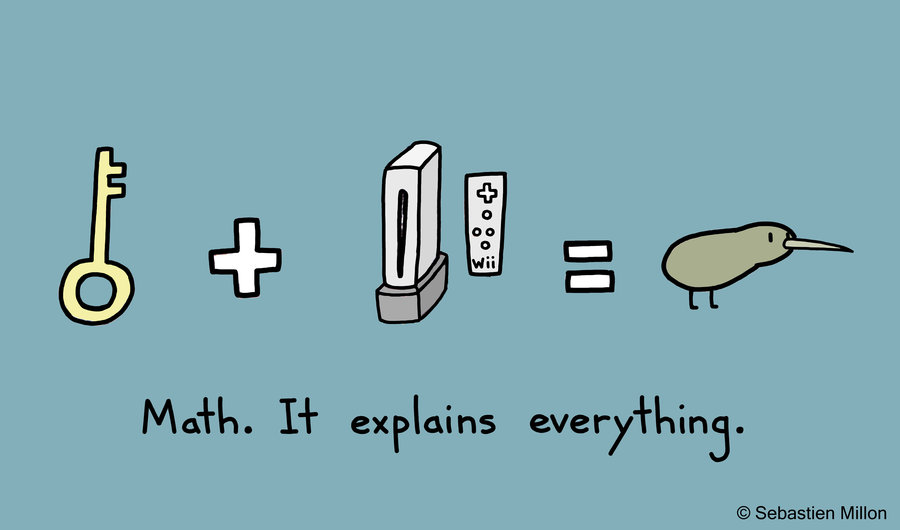

Giggles: Key + Wii = Kiwi; Math!

Dug out this old notebook from when I was learning about logistic regression. We all know that the coefficients of a linear regression relate to the response variable linearly, but how the coefficients of a logistic regression relate to it was not as clear.

If you’re wondering the same thing, I’ve worked through a practical example using Kaggle’s Titanic dataset and validated it against Sklearn’s logistic regression library.


Quick Primer

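Logistic Regression is commonly defined as:

$$h_\theta(x) = P(y = 1 \mid x) = \frac{1}{1 + e^{-\theta^T x}}$$

You already know that, but with some algebraic manipulation, the above equation can also be interpreted as follows:

$$\log\left(\frac{h_\theta(x)}{1 - h_\theta(x)}\right) = \theta^T x$$

Notice how the linear combination, $\theta^T x$, is expressed as the log odds (logit) of $h_\theta(x)$. Let’s elaborate on this idea with a few examples.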
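The algebra connecting the two forms is easy to check numerically. Below is a minimal sketch in plain Python; `sigmoid` and `logit` are my own helper names, not a library API. Applying `logit` to the output of `sigmoid` recovers the original linear combination, which is exactly the statement that $\theta^T x$ is the log odds of $h_\theta(x)$.

```python
import math


def sigmoid(z):
    """Logistic function: maps a log odds value z to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def logit(p):
    """Log odds of a probability p in (0, 1); the inverse of sigmoid."""
    return math.log(p / (1.0 - p))


# Round trip: linear combination -> probability -> log odds
for z in (-2.0, 0.0, 1.5):
    p = sigmoid(z)
    print(f"z = {z:+.1f} -> p = {p:.4f} -> logit(p) = {logit(p):+.4f}")
```

Each printed `logit(p)` should equal the `z` it started from.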

Titanic Example

Kaggle is a great platform for budding data scientists to get more practice. I’m currently working through the Titanic dataset, and we’ll use this as our case study for our logistic regression.

Let’s start by loading some Python libraries.

In [1]:

```python
import pandas as pd
import matplotlib.pyplot as plt
import numpy as np
%matplotlib inline
```

Read in our data set:

In [2]:

```python
train = pd.read_csv('train.csv')
train[['PassengerId', 'Survived', 'Name', 'Sex', 'Age']].head()
```

| | PassengerId | Survived | Name | Sex | Age |
|---|---|---|---|---|---|
| 0 | 1 | 0 | Braund, Mr. Owen Harris | male | 22.0 |
| 1 | 2 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38.0 |
| 2 | 3 | 1 | Heikkinen, Miss. Laina | female | 26.0 |
| 3 | 4 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35.0 |
| 4 | 5 | 0 | Allen, Mr. William Henry | male | 35.0 |

Coefficient of a Single Dichotomous Feature

Dichotomous just means the feature can take only two values, which we encode as 0 or 1, such as the field Sex in our Titanic data set. In this section, we’ll explore what the coefficients mean when regressing against only one dichotomous feature.

Let’s map males to 0 and females to 1, then feed the feature through sklearn’s logistic regression function to get the coefficients out: $\theta_0$ for the bias and $\theta_1$ for the logistic coefficient for sex. With a single feature, $\theta^T x = \theta_0 + \theta_1 x$. Then we’ll manually compute the coefficients ourselves to convince ourselves of what’s happening.

In [3]:

```python
# Encode Sex as a dichotomous feature: male -> 0, female -> 1
train.Sex = train.Sex.apply(lambda x: 0 if x == 'male' else 1)

y_train = train.Survived
x_train = train.drop('Survived', axis=1)
```

Fitting Against Sklearn

Sklearn’s LogisticRegression applies L2 regularization by default, so we’ll set the parameter C (the inverse of the regularization strength) to a large value to emulate no regularization.
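To get a feel for what C does, here is a minimal sketch in plain Python that fits the same one-feature model by Newton’s method, working directly on the aggregated counts from the survival table further down (109 of 577 men and 233 of 314 women survived). `fit_grouped_logistic` is a hypothetical helper, not part of sklearn. It minimizes $\frac{1}{2}\lVert\theta\rVert^2 + C \cdot \text{NLL}(\theta)$, the form of L2 penalty sklearn documents, and, as an assumption meant to mimic the liblinear solver, it penalizes the intercept as well as the coefficient. Treat it as an illustration, not a reimplementation of sklearn.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_grouped_logistic(groups, C=None, iters=50, tol=1e-12):
    """Hypothetical helper: Newton's method for P(y=1 | x) = sigmoid(theta0 + theta1 * x).

    groups: iterable of (x, total, positives) tuples, i.e. aggregated 0/1 data.
    C: inverse L2 strength, minimizing 0.5 * ||theta||^2 + C * NLL(theta);
       None means no penalty. Both theta0 and theta1 are penalized (assumption).
    """
    # Dividing the objective by C gives NLL + lam/2 * ||theta||^2 with lam = 1/C
    lam = 0.0 if C is None else 1.0 / C
    t0, t1 = 0.0, 0.0
    for _ in range(iters):
        # Gradient (g) and Hessian (h) of NLL + lam/2 * ||theta||^2
        g0, g1 = lam * t0, lam * t1
        h00, h01, h11 = lam, 0.0, lam
        for x, n, k in groups:
            p = sigmoid(t0 + t1 * x)
            r = n * p - k          # first derivative of the group's NLL w.r.t. its log odds
            w = n * p * (1.0 - p)  # second derivative of the same
            g0 += r
            g1 += r * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        # Solve the 2x2 Newton system H @ step = g explicitly
        det = h00 * h11 - h01 * h01
        s0 = (h11 * g0 - h01 * g1) / det
        s1 = (h00 * g1 - h01 * g0) / det
        t0, t1 = t0 - s0, t1 - s1
        if abs(s0) + abs(s1) < tol:
            break
    return t0, t1


# (Sex, Total, Survived) from the survival table: 0 = male, 1 = female
groups = [(0, 577, 109), (1, 314, 233)]
print("no penalty:", fit_grouped_logistic(groups))
print("C = 1e10:  ", fit_grouped_logistic(groups, C=1e10))
print("C = 1.0:   ", fit_grouped_logistic(groups, C=1.0))
```

With no penalty or a huge C, the pair lands on the log odds figures worked out later in this post; with `C=1.0` it is pulled toward zero, which is why the default C would make the coefficients harder to read as log odds.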

In [4]:

```python
from sklearn.linear_model import LogisticRegression

clf = LogisticRegression(C=1e10)  # some large number for C
feature = ['Sex']
clf.fit(x_train[feature], y_train)
```

```
LogisticRegression(C=10000000000.0, class_weight=None, dual=False,
          fit_intercept=True, intercept_scaling=1, max_iter=100,
          multi_class='ovr', n_jobs=1, penalty='l2', random_state=None,
          solver='liblinear', tol=0.0001, verbose=0, warm_start=False)
```

Now that we’ve fitted the logistic regression function, we can ask sklearn to give us the two terms in $\theta$, namely the intercept $\theta_0$ and the coefficient $\theta_1$:

In [5]:

```python
print('intercept:', clf.intercept_)
print('coefficient:', clf.coef_[0])
```

```
intercept: [-1.45707193]
coefficient: [ 2.51366047]
```

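Cool, so with our newly fitted $\theta$, our logistic regression is now of the form:

$$h_\theta(x) = \frac{1}{1 + e^{-(-1.4571 + 2.5137x)}}$$

or

$$\log\left(\frac{h_\theta(x)}{1 - h_\theta(x)}\right) = -1.4571 + 2.5137x$$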
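Going the other way, the sigmoid turns the fitted log odds back into survival probabilities. As a minimal sketch, with the intercept and coefficient printed above hard-coded and `survival_probability` as a hypothetical helper name, plugging in x = 0 (male) and x = 1 (female) should reproduce the ProbOfSurvival column of the survival table.

```python
import math

# Intercept and coefficient copied from the sklearn output above
theta0 = -1.45707193
theta1 = 2.51366047


def survival_probability(sex):
    """P(Survived = 1 | Sex) for sex coded 0 (male) or 1 (female)."""
    z = theta0 + theta1 * sex          # log odds of survival
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid turns log odds into a probability


p_male = survival_probability(0)
p_female = survival_probability(1)
print(f"male: {p_male:.4f}, female: {p_female:.4f}")  # male: 0.1889, female: 0.7420
```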

Survival for Males

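So, when $x = 0$, meaning the passenger is male, our equation boils down to:

$$\log\left(\frac{h_\theta(0)}{1 - h_\theta(0)}\right) = \theta_0 = -1.4571$$

Exponentiating both sides gives us:

$$\frac{h_\theta(0)}{1 - h_\theta(0)} = e^{-1.4571} \approx 0.2329$$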

Now let’s verify this ourselves via python.

In [6]:

```python
survived_by_sex = train[train.Survived == 1].groupby(train.Sex).count()[['Survived']]
survived_by_sex['Total'] = train.Survived.groupby(train.Sex).count()
survived_by_sex['NotSurvived'] = survived_by_sex.Total - survived_by_sex.Survived
survived_by_sex['OddsOfSurvival'] = survived_by_sex.Survived / survived_by_sex.NotSurvived
survived_by_sex['ProbOfSurvival'] = survived_by_sex.Survived / survived_by_sex.Total
survived_by_sex['Log(OddsOfSurvival)'] = np.log(survived_by_sex.OddsOfSurvival)
survived_by_sex
```

| Sex | Survived | Total | NotSurvived | OddsOfSurvival | ProbOfSurvival | Log(OddsOfSurvival) |
|---|---|---|---|---|---|---|
| 0 | 109 | 577 | 468 | 0.232906 | 0.188908 | -1.457120 |
| 1 | 233 | 314 | 81 | 2.876543 | 0.742038 | 1.056589 |

As you can see, for males, 109 men survived but 468 did not. The odds of survival for men are:

$$\text{odds}_{\text{male}} = \frac{109}{468} \approx 0.2329$$

And if we take the log of the odds of survival for men:

$$\log\left(\frac{109}{468}\right) \approx -1.4571$$

This is almost identical to the intercept we got from sklearn’s fit. In essence, the intercept of the logistic regression is the log odds of survival for our base reference group, men (Sex = 0).

Survival for Females

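Now that we understand what the bias term means in the logistic regression, it follows naturally that adding $\theta_1$ to it gives us the log odds of survival for females ($x = 1$):

$$\log\left(\frac{h_\theta(1)}{1 - h_\theta(1)}\right) = \theta_0 + \theta_1$$

Don’t take my word for it yet; we’ll verify that for ourselves.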

In [7]:

```python
survived_by_sex  # same table as above
```

| Sex | Survived | Total | NotSurvived | OddsOfSurvival | ProbOfSurvival | Log(OddsOfSurvival) |
|---|---|---|---|---|---|---|
| 0 | 109 | 577 | 468 | 0.232906 | 0.188908 | -1.457120 |
| 1 | 233 | 314 | 81 | 2.876543 | 0.742038 | 1.056589 |

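Out of 314 females on board, 233 survived but 81 didn’t. So the odds of survival for females are:

$$\text{odds}_{\text{female}} = \frac{233}{81} \approx 2.8765$$

Then taking the log gives us:

$$\log\left(\frac{233}{81}\right) \approx 1.0566$$

And this is also what sklearn gave us above as $\theta_0 + \theta_1 = -1.4571 + 2.5137 = 1.0566$.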

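Voilà, nothing too groundbreaking, although it’s interesting to understand the relationship between $\theta_0$ and $\theta_1$: $\theta_0$ is the log odds of survival for men, and $\theta_1$ is how much being female adds to those log odds, i.e. the log of the odds ratio between females and males.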
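Because a model with an intercept and a single 0/1 feature has one free parameter per group, its unregularized fit reproduces each group’s observed survival rate, so both coefficients can be written down directly from the counts. A minimal sketch, where `dichotomous_logit_coefficients` is a hypothetical helper name: the intercept is the log odds for the reference group, the coefficient is the difference in log odds, and exponentiating the coefficient gives the odds ratio of survival for females versus males.

```python
import math


def dichotomous_logit_coefficients(survived_0, total_0, survived_1, total_1):
    """Closed-form unregularized coefficients for one 0/1 feature.

    theta0 is the log odds in the reference group (feature = 0);
    theta1 is the difference in log odds between the two groups.
    """
    odds_0 = survived_0 / (total_0 - survived_0)
    odds_1 = survived_1 / (total_1 - survived_1)
    theta0 = math.log(odds_0)
    theta1 = math.log(odds_1) - math.log(odds_0)
    return theta0, theta1


# Counts from the survival table above: Sex = 0 (male), Sex = 1 (female)
theta0, theta1 = dichotomous_logit_coefficients(109, 577, 233, 314)
print(f"theta0 = {theta0:.4f}, theta1 = {theta1:.4f}")  # theta0 = -1.4571, theta1 = 2.5137
print(f"exp(theta1) = {math.exp(theta1):.2f}")          # exp(theta1) = 12.35, the odds ratio
```

In other words, under this model the odds of survival for a female passenger are roughly 12 times the odds for a male passenger.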

Where to Go from Here

If you’re still trying to make more connections to how logistic regression is derived, I would point you in the direction of the Bernoulli distribution, how the Bernoulli distribution can be expressed as a member of the exponential family, and how Generalized Linear Models produce a learning algorithm for any member of the exponential family.
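As a starting point for that connection, here is a short sketch of the standard rewrite: writing the Bernoulli probability mass function in exponential-family form exposes the logit as its natural parameter $\eta$, and setting $\eta = \theta^T x$ gives back the logistic regression from the primer.

$$p(y;\phi) = \phi^{y}(1-\phi)^{1-y} = \exp\left(y \log\frac{\phi}{1-\phi} + \log(1-\phi)\right), \qquad y \in \{0, 1\}$$

$$\eta = \log\frac{\phi}{1-\phi} \quad\Longrightarrow\quad \phi = \frac{1}{1 + e^{-\eta}}, \qquad \eta = \theta^T x$$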

Thank you, Sebastien, for the wonderful cover art; more of his work can be found here.